Most new insurance sales agents quit within two years.

Imagine you just got a job as a sales agent in a life insurance company. You may have some simple questions to help you score early wins:

- What are the key features of our premium life insurance policy?

- A customer is concerned about the premium cost. What’s the best way to address this?

- What should I highlight when pitching a life insurance policy to a middle-aged customer with two children?

- What are the differences between our term life and whole life insurance policies?

- Prioritize leads for me to follow up.

You hesitate to ask your boss and don’t want to seem like too much of a novice to your co-workers. So, who’re you going to ask?

If you’re fortunate enough to work at a tech-savvy insurer, your company has already built an internal tool to answer your questions and improve your productivity. Let’s (hypothetically) call your company’s tool Fiducia.

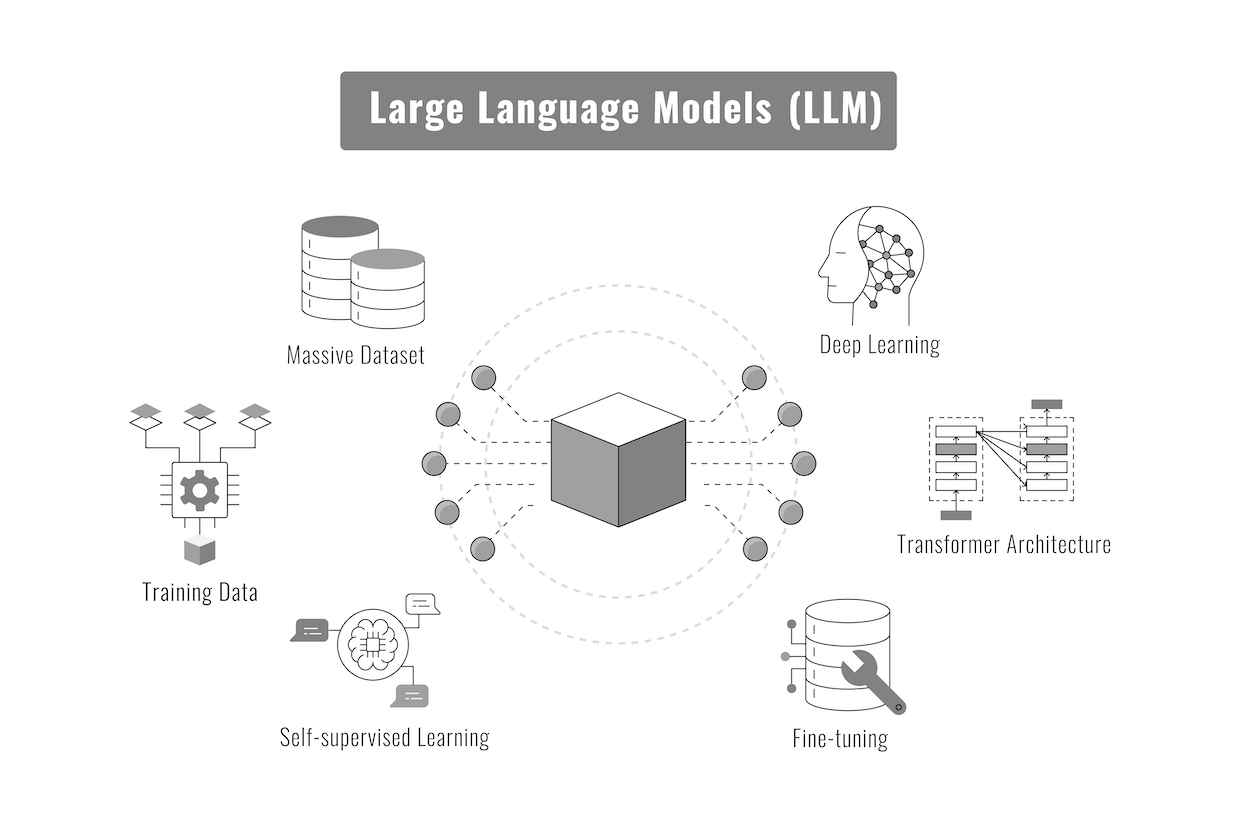

The tool would work much like ChatGPT: it is built on what the industry calls a Large Language Model (LLM).

Yet, there’s one big (actually colossal) difference between Fiducia and ChatGPT.

Fiducia would be a domain-specific LLM, whereas ChatGPT is a general-purpose model. This means that Fiducia is a specialized model created to answer specific queries based on your company’s guidelines and rules.

To develop Fiducia, your company’s internal data sources (e.g., policy documents, customer interactions, claims records) and external sources (e.g., industry guidelines, legal regulations) have been carefully curated and used to build it. In AI parlance, Fiducia has been trained to handle questions about customer support, claims processing, underwriting, policy management, and lead generation.

Fiducia at work

To get an idea of how Fiducia would reply to a sales agent’s query, consider the following QA examples:

- You: What should I highlight when pitching a life insurance policy to a middle-aged customer with two children?

- Fiducia: Emphasize the financial security and peace of mind that life insurance provides. Highlight the benefits of protecting their children’s future, covering educational expenses, and providing for their spouse in case of an untimely event. Discuss options for term and whole life insurance, and mention any available riders for critical illness or disability.

- You: What are the key features of our premium life insurance policy?

- Fiducia: Our premium life insurance policy offers lifetime coverage with guaranteed level premiums, cash value growth, and flexible payment options. It includes additional riders for critical illness and disability, tax-deferred cash value accumulation, and estate planning benefits. You can convert a term policy to a permanent one without extra underwriting, and our dedicated support team is available for all your policy management needs.

Fiducia: under the hood

Tech enablers of an LLM

A key technology enabler in the development and operation of Fiducia would be Retrieval-Augmented Generation, or RAG for short. RAG helps Fiducia stay up to date when things (rules, policies, data) change, as they always do, because answers draw on documents retrieved at query time rather than only on what the model learned during training.

Fiducia can be represented as a combination of a domain-specific language model and RAG technology.

Let \( \mathcal{D} \) be the domain of life insurance. Define Fiducia as follows:

Model Definition

\[ \text{Fiducia} = \text{LLM}_{\mathcal{D}} + \text{RAG} \]

where \( \text{LLM}_{\mathcal{D}} \) is the domain-specific Large Language Model for life insurance and \( \text{RAG} \) is the Retrieval-Augmented Generation component.

Training Data

Let \( \mathcal{T} = \{(x_i, y_i)\}_{i=1}^n \) be the set of training samples, where \( x_i \) are input data points (e.g., customer queries, policy details) and \( y_i \) are the corresponding outputs (e.g., responses, recommendations).

Supervised Learning

The model is trained using supervised learning:

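\[ \theta^{*} = \arg\min_{\theta} \sum_{i=1}^n \mathcal{L}(\text{LLM}_{\theta}(x_i), y_i), \qquad \text{LLM}_{\mathcal{D}} = \text{LLM}_{\theta^{*}} \] where \( \theta \) represents the model parameters, \( \mathcal{L} \) is the loss function, and \( \theta^{*} \) is the parameter setting that minimizes the total loss over \( \mathcal{T} \).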
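To make the argmin over \( \theta \) concrete, here is a toy sketch in Python. Everything in it is an assumption made for illustration: the model has a single parameter, the loss is squared error, the optimizer is plain gradient descent, and the three training pairs are invented. A real LLM has billions of parameters and is typically trained with a cross-entropy loss over tokens, but the idea of searching for the parameters with the lowest total loss is the same.

```python
# Toy training set T = {(x_i, y_i)}; invented data where y = 2x exactly.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]


def model(theta: float, x: float) -> float:
    """A one-parameter stand-in for LLM_theta (assumption, not a real LLM)."""
    return theta * x


def total_loss(theta: float) -> float:
    """Sum of squared-error losses over the training set."""
    return sum((model(theta, x) - y) ** 2 for x, y in samples)


def train(steps: int = 200, lr: float = 0.01) -> float:
    """Approximate the argmin over theta with plain gradient descent."""
    theta = 0.0
    for _ in range(steps):
        # Derivative of the total squared error with respect to theta.
        grad = sum(2 * (model(theta, x) - y) * x for x, y in samples)
        theta -= lr * grad
    return theta


theta_star = train()
```

With this data the search settles very close to \( \theta = 2 \), the value at which the total loss is zero.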

RAG

Define the RAG mechanism as:

\[ \text{RAG}(x) = \text{Gen}(x, \text{Ret}(x)) \]

where

- \(\text{Ret}(x)\) is the retrieval function that fetches relevant documents \(\{d_j\}_{j=1}^m\) from a knowledge base \( \mathcal{K} \) given an input \( x \),

- \(\text{Gen}(x, \{d_j\}_{j=1}^m)\) is the generation function that produces the final output using both the input \(x\) and the retrieved documents \(\{d_j\}_{j=1}^m\).
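The composition \( \text{RAG}(x) = \text{Gen}(x, \text{Ret}(x)) \) can be sketched in a few lines of Python. The names `make_rag`, `stub_ret`, and `stub_gen` are hypothetical, and the two stubs are placeholders: a real retriever would search a knowledge base and a real generator would call a language model.

```python
from typing import Callable, List

Retriever = Callable[[str], List[str]]       # Ret(x) -> documents
Generator = Callable[[str, List[str]], str]  # Gen(x, documents) -> answer


def make_rag(ret: Retriever, gen: Generator) -> Callable[[str], str]:
    """Compose a retriever and a generator into RAG(x) = Gen(x, Ret(x))."""
    def rag(x: str) -> str:
        return gen(x, ret(x))
    return rag


def stub_ret(x: str) -> List[str]:
    """Placeholder retriever: always returns the same invented document."""
    return ["Whole life insurance provides lifetime coverage."]


def stub_gen(x: str, docs: List[str]) -> str:
    """Placeholder generator: reports what it received instead of calling an LLM."""
    return f"Q: {x} | based on {len(docs)} document(s)"


rag = make_rag(stub_ret, stub_gen)
answer = rag("What is whole life insurance?")
```

Keeping the two functions separate means the knowledge base behind the retriever can change without touching the generator.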

Role of RAG in Fiducia

The RAG technology plays a crucial role in Fiducia by enhancing its performance and accuracy in the following ways:

Contextual Retrieval

\[ \text{Ret}(x) = \{d_j\}_{j=1}^m \text{ such that } d_j \in \mathcal{K} \text{ and } \text{sim}(x, d_j) \text{ is maximized} \] where \( \text{sim} \) is a similarity function that measures the relevance of document \( d_j \) to the input \( x \). In other words, \( \text{Ret}(x) \) returns the \( m \) documents in \( \mathcal{K} \) that are most similar to \( x \).
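One way to picture the retrieval step is the Python sketch below. It assumes that \( \text{sim} \) is cosine similarity over simple word counts, which is a stand-in chosen for brevity; production systems usually compare learned embeddings instead. The function names and the sample documents are invented for illustration.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Represent a text as lowercase word counts (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def sim(x: str, d: str) -> float:
    """Cosine similarity between the word-count vectors of x and d."""
    a, b = embed(x), embed(d)
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def ret(x: str, knowledge_base: List[str], m: int = 2) -> List[str]:
    """Return the m documents in the knowledge base most similar to x."""
    return sorted(knowledge_base, key=lambda d: sim(x, d), reverse=True)[:m]


# Hypothetical knowledge base K with three invented policy snippets.
kb = [
    "Term life insurance covers a fixed period.",
    "Whole life insurance provides lifetime coverage and builds cash value.",
    "A critical illness rider pays a lump sum on diagnosis.",
]
top = ret("How does a critical illness rider work?", kb, m=1)
```

Here `top` holds the single snippet about the critical illness rider, since it shares the most words with the query.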

Augmented Generation

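\[ \text{Gen}(x, \{d_j\}_{j=1}^m) = \text{LLM}_{\mathcal{D}}(x \cup \{d_j\}_{j=1}^m) \] where the generation function incorporates the retrieved documents to produce a more accurate and contextually relevant output. Here \( x \cup \{d_j\}_{j=1}^m \) denotes the query and the retrieved documents combined into a single model input.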
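In practice, combining the query with the retrieved documents often just means placing both in one prompt. The sketch below shows one possible layout; the prompt wording, the function names, and the `llm` callable are assumptions for illustration, not a prescribed format.

```python
from typing import Callable, List


def build_prompt(x: str, docs: List[str]) -> str:
    """Combine the query x and the retrieved documents into one model input."""
    numbered = "\n".join(f"[{j}] {d}" for j, d in enumerate(docs, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {x}\nAnswer:"
    )


def gen(x: str, docs: List[str], llm: Callable[[str], str]) -> str:
    """Gen(x, docs): pass the combined prompt to a language model callable."""
    return llm(build_prompt(x, docs))


# Invented query and document, used only to show the prompt layout.
prompt = build_prompt("What is a rider?", ["A rider is an optional add-on."])
```

Numbering the documents makes it possible to ask the model to cite which snippet supports its answer.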

Error Minimization

By integrating relevant documents, RAG aims to reduce the error between the generated response and the desired response \( y \) for input \( x \):

\[ \min \mathcal{L}(\text{Gen}(x, \text{Ret}(x)), y) \]

The RAG component plays a central role in retrieving contextually relevant information and augmenting the model’s generation capabilities, thus ensuring accurate, relevant, and up-to-date responses for life insurance-related tasks.